Introduction

The latest version of Cyberpunk 2077 ships an optimization that binds all of the game's threads to the Performance Cores (P-Cores) of Intel CPUs. This made me curious: why, and how?

My hypothesis is that, because P-Cores and E-Cores have separate L2 caches, moving a thread from one core type to the other introduces significant latency. On some CPU models this latency can be high enough to degrade performance noticeably. Creating separate thread pools for E-Cores and P-Cores, and deliberately assigning threads to a specific core type, could therefore improve performance substantially.

To test this hypothesis and explore the impact of context switching between P-Cores and E-Cores, I developed a benchmark. Below I present the code, discuss the precautions taken during its development, and analyze the results from running it on an Intel CPU with both P-Cores and E-Cores.

Benchmark Code

The benchmark program is written in C++ and utilizes the Windows API to retrieve processor information and set thread affinity. Here's the code:

#include <windows.h>

#include <chrono>
#include <cmath>
#include <cstdio>
#include <random>
#include <string>
#include <thread>
#include <vector>

const int NUM_ITERATIONS = 1000000;

// Pins the calling thread to one randomly chosen core from `masks`.
// Does nothing when the list is empty (for example, on a CPU without E-Cores).
void pinToRandomCore(const std::vector<DWORD_PTR>& masks, std::mt19937& gen) {
    if (masks.empty()) {
        return;
    }
    std::uniform_int_distribution<size_t> pick(0, masks.size() - 1);
    SetThreadAffinityMask(GetCurrentThread(), masks[pick(gen)]);
}

void benchmarkTask(std::vector<DWORD_PTR> pCoreMasks, std::vector<DWORD_PTR> eCoreMasks, bool pCoreOnly, bool eCoreOnly) {
    std::random_device rd;
    std::mt19937 gen(rd());

    // Volatile sink so an optimizing compiler cannot discard the work loop
    volatile double sink = 0.0;

    for (int i = 0; i < NUM_ITERATIONS; ++i) {
        if (pCoreOnly && !pCoreMasks.empty()) {
            // Set the thread affinity to a random P-Core
            pinToRandomCore(pCoreMasks, gen);
        } else if (eCoreOnly && !eCoreMasks.empty()) {
            // Set the thread affinity to a random E-Core
            pinToRandomCore(eCoreMasks, gen);
        } else {
            // Mixed mode: alternate between core types on every iteration
            // (P-Core on even i, E-Core on odd i), picking a random core of that type
            pinToRandomCore(i % 2 == 0 ? pCoreMasks : eCoreMasks, gen);
        }

        // Perform some work
        double result = 0.0;
        for (int j = 0; j < 1000; ++j) {
            result += std::sin(j) * std::cos(j);
        }
        sink = result;
    }
}

int main(int argc, char* argv[]) {
    bool pCoreOnly = false;
    bool eCoreOnly = false;

    // Parse command-line arguments
    for (int i = 1; i < argc; ++i) {
        std::string arg = argv[i];
        if (arg == "--p-core-only") {
            pCoreOnly = true;
        } else if (arg == "--e-core-only") {
            eCoreOnly = true;
        }
    }

    // First call only asks for the required buffer size
    DWORD returnedLength = 0;
    BOOL ret = GetLogicalProcessorInformationEx(RelationProcessorCore, nullptr, &returnedLength);
    if (ret != FALSE || GetLastError() != ERROR_INSUFFICIENT_BUFFER) {
        fprintf(stderr, "Could not query the processor information size.\n");
        return 1;
    }

    // Second call fills the buffer with one variable-sized record per core
    std::vector<char> buffer(returnedLength);
    auto* processorInfo = reinterpret_cast<SYSTEM_LOGICAL_PROCESSOR_INFORMATION_EX*>(buffer.data());
    if (!GetLogicalProcessorInformationEx(RelationProcessorCore, processorInfo, &returnedLength)) {
        fprintf(stderr, "Could not retrieve the processor information.\n");
        return 1;
    }

    std::vector<DWORD_PTR> pCoreMasks;
    std::vector<DWORD_PTR> eCoreMasks;

    char* ptr = buffer.data();
    while (ptr < buffer.data() + returnedLength) {
        auto* info = reinterpret_cast<SYSTEM_LOGICAL_PROCESSOR_INFORMATION_EX*>(ptr);
        if (info->Relationship == RelationProcessorCore) {
            // Note: Microsoft documents a higher EfficiencyClass as a higher-performance
            // core, so confirm that this mapping matches the CPU under test.
            if (info->Processor.EfficiencyClass == 0 || info->Processor.EfficiencyClass == 2) {
                pCoreMasks.push_back(info->Processor.GroupMask[0].Mask);
            } else if (info->Processor.EfficiencyClass == 1) {
                eCoreMasks.push_back(info->Processor.GroupMask[0].Mask);
            }
        }
        ptr += info->Size;  // records are variable-sized, so advance by Size
    }

    printf("Number of P-Cores: %zu\n", pCoreMasks.size());
    printf("Number of E-Cores: %zu\n", eCoreMasks.size());

    // Create multiple benchmark threads
    const int numThreads = 4;
    std::vector<std::thread> threads;

    auto start = std::chrono::high_resolution_clock::now();
    for (int i = 0; i < numThreads; ++i) {
        threads.emplace_back(benchmarkTask, pCoreMasks, eCoreMasks, pCoreOnly, eCoreOnly);
    }

    // Wait for all threads to finish
    for (auto& thread : threads) {
        thread.join();
    }
    auto end = std::chrono::high_resolution_clock::now();

    auto duration = std::chrono::duration_cast<std::chrono::milliseconds>(end - start).count();
    printf("Benchmark completed in %lld milliseconds.\n", static_cast<long long>(duration));

    return 0;
}

Explanation

- It retrieves processor information using the GetLogicalProcessorInformationEx function to determine the number of P-Cores and E-Cores available on the system.
- It then creates a specified number of benchmark threads (numThreads) and runs the benchmarkTask function on each of them.
- Inside benchmarkTask, every iteration picks a random core and sets the thread affinity accordingly using SetThreadAffinityMask. The core type depends on the command-line flags: --p-core-only for running on P-Cores only, --e-core-only for running on E-Cores only, or no flag for forcing context switching by alternating between P-Cores and E-Cores.
- Each iteration of benchmarkTask then performs some CPU-intensive work: it accumulates the products of sine and cosine values.

The program measures the total execution time of the benchmark using std::chrono::high_resolution_clock and displays it in milliseconds.
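The core classification step can also be written without hard-coded class numbers. Microsoft's documentation describes a core with a higher EfficiencyClass as having intrinsically greater performance, so the sketch below treats the highest class found as the P-Core class and everything else as E-Cores. This is a variant under that assumption, not the benchmark's own logic. CoreRecord, CorePools and classifyCores are hypothetical names, and CoreRecord is a platform-neutral stand-in for the two fields the benchmark reads from SYSTEM_LOGICAL_PROCESSOR_INFORMATION_EX.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the fields read from each RelationProcessorCore record.
struct CoreRecord {
    std::uint8_t efficiencyClass;  // from info->Processor.EfficiencyClass
    std::uint64_t mask;            // from info->Processor.GroupMask[0].Mask
};

// Hypothetical result type: one list of affinity masks per core type.
struct CorePools {
    std::vector<std::uint64_t> pCoreMasks;
    std::vector<std::uint64_t> eCoreMasks;
};

// Assumption: the highest efficiency class present marks the P-Cores and every
// lower class is an E-Core. On a non-hybrid CPU all cores land in pCoreMasks.
CorePools classifyCores(const std::vector<CoreRecord>& cores) {
    CorePools pools;
    std::uint8_t highest = 0;
    for (const CoreRecord& core : cores) {
        highest = std::max(highest, core.efficiencyClass);
    }
    for (const CoreRecord& core : cores) {
        if (core.efficiencyClass == highest) {
            pools.pCoreMasks.push_back(core.mask);
        } else {
            pools.eCoreMasks.push_back(core.mask);
        }
    }
    return pools;
}
```

To use it, fill a std::vector<CoreRecord> inside the loop that walks the processor information buffer, then pass it to classifyCores. Comparing against the highest class present keeps the split independent of the exact values a given CPU reports.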

Precautions

Core Arrangement: Initially, the program assumed that P-Cores and E-Cores were laid out in a fixed order. That assumption is wrong in principle, even if it happened to work in practice. It is crucial to take the affinity masks from the processor information returned by GetLogicalProcessorInformationEx and set thread affinity from those, regardless of the CPU model or core arrangement. This ensures that the benchmark targets the intended core type on different CPU models.
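One way to check the layout instead of assuming it is to decode each affinity mask into the logical processor indices it covers and print them next to the core type. The helper below is a minimal sketch. maskToProcessorIndices is a hypothetical name, and the code assumes a single processor group, meaning at most 64 logical processors, so that a mask fits in 64 bits.

```cpp
#include <cstdint>
#include <vector>

// Returns the positions of the set bits in an affinity mask. Each set bit
// stands for one logical processor within the processor group.
std::vector<int> maskToProcessorIndices(std::uint64_t mask) {
    std::vector<int> indices;
    for (int bit = 0; bit < 64; ++bit) {
        if (mask & (std::uint64_t{1} << bit)) {
            indices.push_back(bit);
        }
    }
    return indices;
}
```

A core whose mask decodes to two indices exposes two logical processors, which is what a core with Hyper-Threading enabled looks like. A core with a single index exposes one.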

Context Switching Location: One open question was whether the switch between cores should happen inside the benchmarkTask function or only once, when the benchmark threads are created. It has to happen inside benchmarkTask, on every iteration, so that the measured time includes the cost of moving between P-Cores and E-Cores. Setting the affinity only at thread creation would pin each thread once and measure no switching at all, which would give misleading results.
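The point about placement can be illustrated with a small timing harness in which the core switch is a hook called inside the timed loop. This is a sketch rather than the benchmark itself. timeLoopMs and the migrate parameter are hypothetical names, and in a real run the hook would wrap the SetThreadAffinityMask call.

```cpp
#include <chrono>
#include <cmath>
#include <functional>

// Runs `iterations` rounds of the same sin/cos workload as the benchmark and
// returns the elapsed time in milliseconds. `migrate` is called at the start
// of every round, inside the timed region, so its cost is part of the result.
double timeLoopMs(int iterations, const std::function<void(int)>& migrate) {
    volatile double sink = 0.0;  // keeps the compiler from discarding the work
    auto start = std::chrono::steady_clock::now();
    for (int i = 0; i < iterations; ++i) {
        migrate(i);
        double result = 0.0;
        for (int j = 0; j < 1000; ++j) {
            result += std::sin(j) * std::cos(j);
        }
        sink = result;
    }
    auto end = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(end - start).count();
}
```

Calling timeLoopMs once with a hook that does nothing and once with a hook that changes the affinity gives two timings whose difference estimates the cost of the switching alone, separate from the sin/cos work.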

Results

Running the benchmark program on an Intel CPU with both P-Cores and E-Cores yielded the following results:

P-Core Only: The benchmark ran the fastest when all threads were bound to P-Cores only.

E-Core Only: The benchmark ran slower when all threads were bound to E-Cores only.

Mixed Mode: The benchmark exhibited the slowest performance when forcing context switching between P-Cores and E-Cores.

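These results are consistent with the hypothesis that the separation of the L2 cache between E-Cores and P-Cores can introduce significant latency when switching between them, although the benchmark does not separate cache effects from the cost of the affinity change itself.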

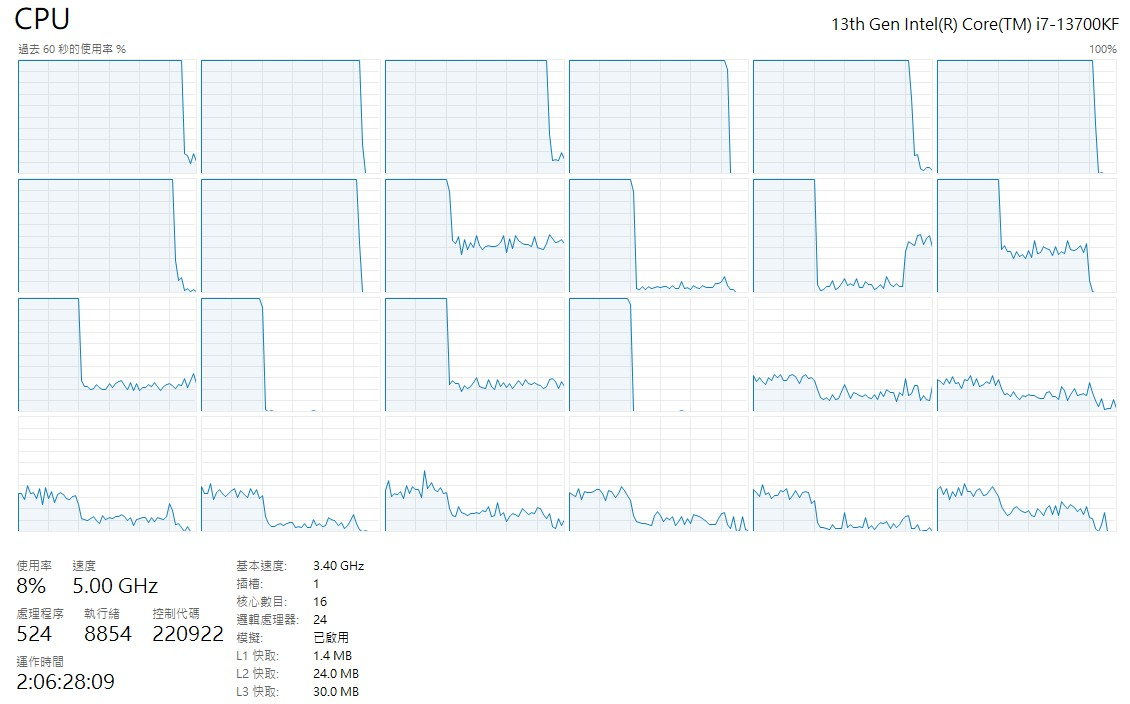

Here are my results on an i7-13700KF CPU (128 GB DDR4-3200 RAM, Windows 11):

.\bench.exe
Number of P-Cores: 8
Number of E-Cores: 8
Benchmark completed in 11180 milliseconds.

.\bench.exe --p-core-only
Number of P-Cores: 8
Number of E-Cores: 8
Benchmark completed in 7261 milliseconds.

.\bench.exe --e-core-only
Number of P-Cores: 8
Number of E-Cores: 8
Benchmark completed in 7447 milliseconds.

Conclusion

In short, under heavy context switching, the ordering from fastest to slowest is P-Core only > E-Core only > mixed mode.

I think this may have an even larger impact on big.LITTLE CPUs with separate clusters, such as the Qualcomm Snapdragon 810.

I hope this experiment serves as a starting point for investigating and optimizing software for heterogeneous CPU architectures. By deliberately assigning threads to specific core types and minimizing context switching between E-Cores and P-Cores, we can potentially improve performance and make fuller use of modern desktop and mobile CPUs (even though Intel reportedly plans to drop E-Cores soon).
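As a sketch of what assigning a thread to a core type could look like outside the benchmark, the per-core masks can be OR-ed into one mask covering every core of that type, and the thread pinned to that combined mask once. combineMasks and pinCurrentThread are hypothetical helpers. The code assumes a single processor group, and the pinning call is only compiled on Windows.

```cpp
#include <cstdint>
#include <vector>
#ifdef _WIN32
#include <windows.h>
#endif

// ORs the per-core affinity masks into one mask covering all of those cores.
std::uint64_t combineMasks(const std::vector<std::uint64_t>& masks) {
    std::uint64_t combined = 0;
    for (std::uint64_t mask : masks) {
        combined |= mask;
    }
    return combined;
}

// Restricts the calling thread to the logical processors set in `mask`.
// Returns false for an empty mask, on failure, or on non-Windows builds.
bool pinCurrentThread(std::uint64_t mask) {
    if (mask == 0) {
        return false;
    }
#ifdef _WIN32
    return SetThreadAffinityMask(GetCurrentThread(), static_cast<DWORD_PTR>(mask)) != 0;
#else
    return false;  // no-op placeholder; this sketch only targets Windows
#endif
}
```

Calling pinCurrentThread(combineMasks(pCoreMasks)) at the start of a worker thread keeps it on P-Cores while still letting the scheduler move it among them. This differs from the benchmark, which deliberately re-pins to a single core on every iteration to provoke switching.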

Appendix

gist: https://gist.github.com/abbychau/8ac93aa252be9d37b7a69d93e09ab004

main.cpp performance (with the iteration count multiplied by 100):